Part 06

Azure Load Balancer

An Azure Load Balancer is a fully managed service that provides high availability by distributing incoming network traffic across multiple servers or virtual machines (VMs). It helps ensure that your applications remain available, scalable, and fault-tolerant.

Types of Azure Load Balancer

Basic Load Balancer

- Designed for small-scale applications and services.

- Supports only one region and is ideal for development and test environments.

- Limited configuration options compared to Standard Load Balancer.

Standard Load Balancer

- More advanced, suitable for production environments.

- Supports multiple regions and larger, more complex workloads.

- Offers advanced features like health probes, NAT rules, and cross-region load balancing.

- Supports both internal and public load balancing.

Key Features of Azure Load Balancer

High Availability

- Distributes traffic across multiple VMs or services, ensuring that your application remains available even if one or more VMs become unhealthy or fail.

- Supports automatic rerouting of traffic in case of VM failure.

Health Probes

- Monitors the health of backend resources (e.g., VMs or applications) by sending periodic requests to check if they are responsive.

- Ensures that traffic is only routed to healthy resources.

Internal and External Load Balancing

- Public Load Balancer: Distributes traffic from the internet to VMs or services in a virtual network.

- Internal Load Balancer: Distributes traffic between VMs within a private virtual network, suitable for internal services.

Automatic Scaling

- Works with Virtual Machine Scale Sets (VMSS) to scale the application up or down based on demand, automatically adjusting the number of VMs in the pool.

Cross-Zone Load Balancing

- Distributes traffic across multiple Availability Zones to ensure better availability and fault tolerance.

Support for IPv6

- Azure Load Balancer supports IPv6 for both internal and public load balancing.

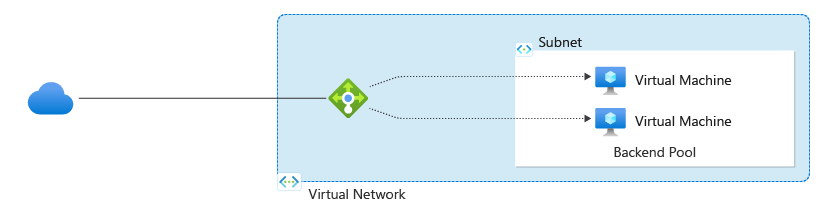

How Azure Load Balancer Works

- Azure Load Balancer uses Layer 4 (TCP/UDP) load balancing, meaning it works at the transport layer of the OSI model.

- It routes traffic based on the IP address and port to the appropriate backend VM or service.

- Traffic distribution is done using algorithms like Round Robin, Least Connections, or Source IP Affinity.

Use Cases for Azure Load Balancer

Web and Application Traffic Distribution

- Distribute user requests to multiple web servers or application instances, ensuring better resource utilization and faster response times.

Microservices Architecture

- Route traffic between different services within a microservices architecture, ensuring each service is scaled according to demand.

High Availability Services

- Ensure that mission-critical applications like databases, web servers, or API services remain available even if individual VMs or services fail.

Hybrid Applications

- Azure Load Balancer can be used to distribute traffic across both on-premises and Azure-based applications.

Azure Load Balancer: Basic vs. Standard

Azure Load Balancer provides high availability and load balancing for your applications. It is available in two tiers: Basic and Standard. Below is a comparison of the key features and differences:

1. Availability

| Feature | Basic Load Balancer | Standard Load Balancer |

|---|---|---|

| SLA | Not provided | 99.99% availability |

| Availability Zones | Not supported | Zone-redundant and zonal |

| Regional Balancing | Single region | Regional balancing supported |

2. Scalability

| Feature | Basic Load Balancer | Standard Load Balancer |

|---|---|---|

| Backend Pool Size | Up to 300 instances | Up to 1000 instances |

| Outbound Connections | Limited | Scalable |

| High Scalability | Limited | Optimized for large workloads |

3. Security

| Feature | Basic Load Balancer | Standard Load Balancer |

|---|---|---|

| Virtual Network Integration | Public IP only | Full VNet integration |

| NSG Association | Not supported | Supported |

| Backend Pool Connectivity | Unrestricted | Secure (VMs explicitly defined) |

4. Features

| Feature | Basic Load Balancer | Standard Load Balancer |

|---|---|---|

| Protocol Support | TCP/UDP | TCP/UDP |

| Health Probes | Basic | Enhanced |

| Cross-Zone Load Balancing | Not supported | Supported |

5. Cost

| Feature | Basic Load Balancer | Standard Load Balancer |

|---|---|---|

| Pricing Model | Free for basic features | Pay-as-you-go |

| Billing | No cost for data processing | Charges based on usage |

Key Recommendations

- Use Basic Load Balancer for simple, small-scale applications where cost is a primary concern, and high availability is not critical.

- Use Standard Load Balancer for production workloads requiring enhanced security, scalability, and reliability.

References:

LAB 01

LAB 02

Configuring Azure Load Balancer

Create Load Balancer:

- Go to Azure Portal, navigate to Load Balancers, and click Create.

- Choose Public or Internal based on your needs.

- Select your Resource Group, Region, and other basic configuration settings.

Set Up Backend Pool:

- Define the backend pool with the VMs or instances that will receive traffic.

- Choose the VMs manually or use a Virtual Machine Scale Set for automatic scaling.

Configure Health Probes:

- Create health probes to monitor the availability of backend VMs or services.

- Set parameters such as protocol, port, and interval for the probe.

Create Load Balancing Rules:

- Define rules that specify how traffic is distributed to backend VMs.

- Configure the ports, IPs, and protocols (e.g., HTTP, HTTPS, or custom TCP).

Review and Create:

- Review your settings and click Create to deploy the load balancer.

Azure Load Balancer helps ensure your services remain highly available and can scale as needed, delivering better performance and fault tolerance for your applications.

Azure Application Gateway

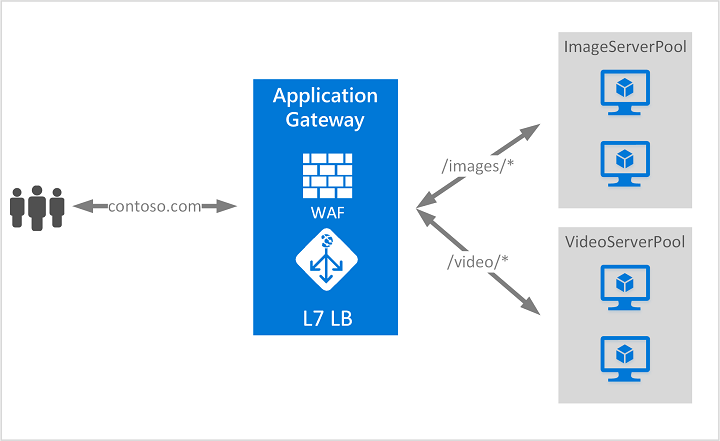

Azure Application Gateway is a web traffic load balancer that enables you to manage and route traffic based on advanced HTTP(S) routing rules. It operates at the Application Layer (Layer 7) of the OSI model, which allows for more granular control of web traffic compared to Layer 4 load balancers like the Azure Load Balancer.

Key Features of Azure Application Gateway

Layer 7 Load Balancing

- Unlike the Azure Load Balancer, which works at Layer 4 (TCP/UDP), Application Gateway works at Layer 7 (HTTP/HTTPS), allowing routing decisions based on URL paths, host headers, HTTP methods, and other content-based criteria.

URL-based Routing

- Application Gateway can route traffic based on the URL path, enabling you to route different types of traffic to different backend pools. For example:

/imagescan route to one pool of servers./apican route to another pool.

- Application Gateway can route traffic based on the URL path, enabling you to route different types of traffic to different backend pools. For example:

Host-based Routing

- Traffic can be routed based on the domain name (host) in the request. This is useful for multi-tenant applications or handling traffic for multiple websites hosted behind the same gateway.

SSL/TLS Termination

- Application Gateway can handle SSL/TLS termination (decryption of secure traffic). This offloads the decryption process from backend servers, improving their performance.

Web Application Firewall (WAF)

- Application Gateway integrates with Azure Web Application Firewall (WAF), providing centralized protection against common threats like SQL injection, cross-site scripting (XSS), and other OWASP top 10 vulnerabilities.

- WAF can be configured with custom rules for enhanced security.

Autoscaling

- Azure Application Gateway supports autoscaling, which adjusts the number of gateway instances based on the incoming traffic, ensuring that you always have the right amount of resources to handle the load.

Session Affinity (Cookie-based routing)

- Application Gateway can route requests from a particular user to the same backend server using session affinity (based on a cookie). This ensures that requests from the same user are consistently handled by the same backend for stateful applications.

Multi-site Hosting

- Supports hosting multiple websites on the same Application Gateway, routing traffic based on different URL paths or host headers.

Custom Health Probes

- Custom health probes are available to monitor the health of backend servers or services. The gateway will only route traffic to healthy resources, ensuring availability.

Redirection

- Azure Application Gateway allows you to redirect traffic from one URL to another. For example, you can configure it to redirect HTTP traffic to HTTPS.

How Azure Application Gateway Works

- Traffic Routing: When a request is sent to an Application Gateway, the gateway inspects the HTTP(S) request based on the configured routing rules. The traffic is then directed to the appropriate backend pool or service.

- Health Monitoring: The gateway constantly monitors the health of backend servers using health probes. If a server is unhealthy, the gateway reroutes traffic to a healthy instance.

- SSL Termination: Application Gateway decrypts incoming SSL/TLS traffic, inspects the traffic, and forwards it to the backend as unencrypted HTTP traffic. This improves backend server performance by offloading SSL processing.

- Firewall Protection: The WAF functionality inspects HTTP traffic for malicious patterns, blocking traffic that matches predefined rules for common web vulnerabilities.

Use Cases for Azure Application Gateway

Web Application Load Balancing

- Use Application Gateway to balance traffic across multiple web servers, improving performance and availability for web applications.

Secure Applications

- By enabling SSL termination, WAF, and custom routing rules, Application Gateway helps secure web applications from attacks while ensuring optimal performance.

Multi-Tenant Applications

- Host multiple websites or applications behind a single Application Gateway instance, using host-based or URL-based routing to direct traffic to the appropriate backend.

Microservices Architecture

- Direct traffic to different backend services or microservices based on URL paths, allowing you to implement complex routing logic for distributed applications.

Global Distribution and Traffic Optimization

- Application Gateway integrates with Azure Front Door to provide global routing and load balancing for applications that need to scale across multiple regions.

Configuring Azure Application Gateway

Create Application Gateway:

- Navigate to Azure Portal, search for Application Gateway, and click Create.

- Choose your Subscription, Resource Group, and Region.

- Provide a name and configure basic settings like SKU and virtual network.

Define Backend Pools:

- Add backend servers or services to your backend pools (e.g., Virtual Machines, VM Scale Sets, or Web Apps).

Set Up Routing Rules:

- Define URL-based or host-based routing rules to specify how traffic is distributed to your backend pools.

Configure Health Probes:

- Set up health probes to monitor the health of your backend services, ensuring only healthy instances receive traffic.

Enable SSL Termination:

- Upload your SSL certificates and configure SSL termination for secure communication with clients.

Configure Web Application Firewall (WAF):

- Enable and configure WAF to protect your application from common vulnerabilities and threats.

Review and Deploy:

- Review all your configurations and click Create to deploy the Application Gateway.

Azure Application Gateway provides intelligent load balancing, security features, and high availability for web applications, making it ideal for modern cloud-based web architectures. It ensures that applications are scalable, secure, and highly available while delivering fast, efficient traffic management.

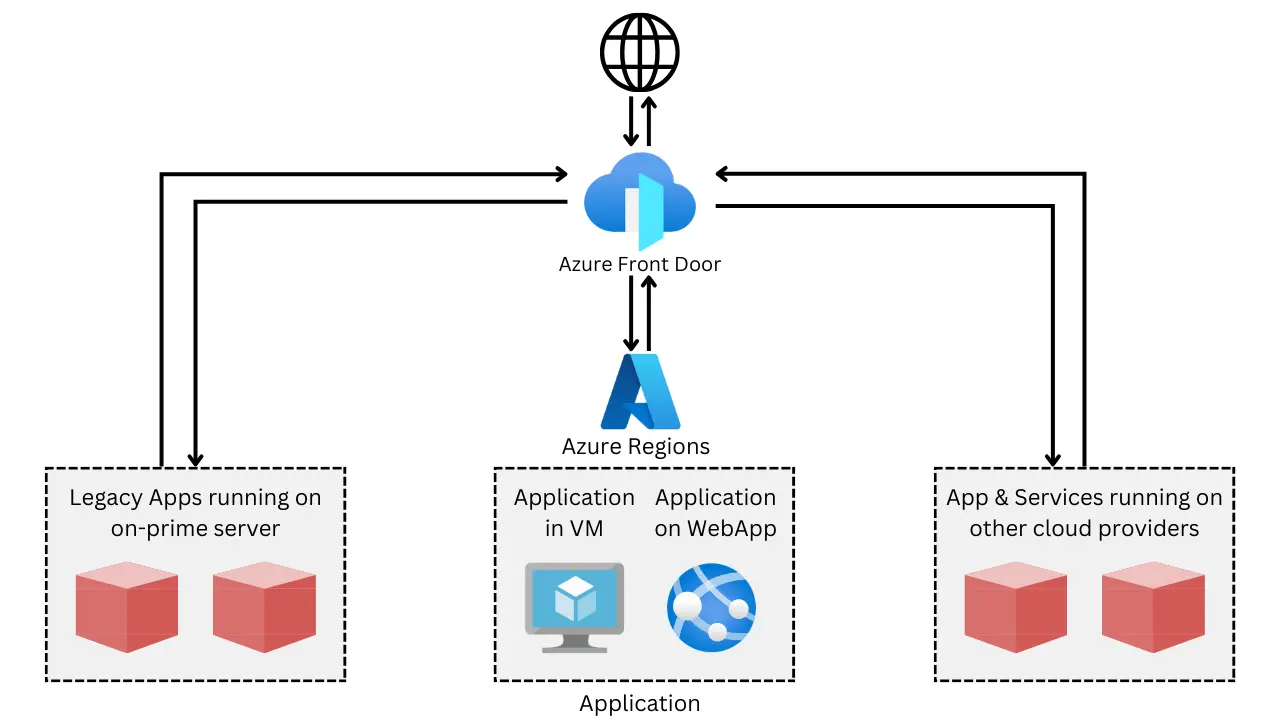

Azure Front Door

Azure Front Door is a global, scalable entry point that optimizes the delivery of your applications and services. It provides high availability and performance by using intelligent routing and various features like SSL offloading, web application firewall, and content caching.

Key Features of Azure Front Door

Global Load Balancing

- Azure Front Door provides global load balancing to route traffic to the nearest region based on factors like latency, geography, and availability.

- It ensures that users always connect to the nearest available region for optimal performance.

Web Application Firewall (WAF)

- Azure Front Door integrates with Web Application Firewall (WAF) to protect your applications from common threats and vulnerabilities, such as SQL injection, cross-site scripting (XSS), and bot attacks.

- It provides customizable security policies and automated rule sets for web traffic protection.

SSL Offloading

- It supports SSL/TLS termination, which offloads the encryption/decryption tasks from your backend services, improving performance and simplifying certificate management.

- Front Door automatically handles HTTPS traffic to secure your applications without burdening the origin server.

Customizable Routing

- Front Door supports intelligent routing based on user-defined rules, allowing traffic to be directed to specific backend pools based on URL paths, HTTP methods, and headers.

- It can route traffic to specific regions or services to optimize the user experience.

Global Caching

- Front Door caches static content closer to users at edge locations worldwide, improving response times for static content delivery.

- It reduces latency and helps improve the performance of content-heavy applications.

URL Rewrite and Redirects

- Azure Front Door enables flexible URL rewriting and redirection rules to customize how requests are processed and directed to the appropriate backend.

- You can modify incoming requests or responses without the need to alter your backend code.

Automatic Failover and High Availability

- Front Door provides automatic failover and traffic rerouting to ensure business continuity in case of backend failures or regional outages.

- It guarantees high availability by routing traffic to healthy backend endpoints.

How Azure Front Door Works

- Global Distribution: Azure Front Door uses a global network of edge locations to route user requests to the closest available backend.

- Routing Methods: It uses various routing techniques:

- Latency-based routing: Routes requests to the backend with the lowest latency.

- Priority-based routing: Routes requests based on a configured priority.

- Weighted routing: Routes traffic to multiple backends based on weighted percentages.

- Health Probes: Azure Front Door continuously monitors the health of backend services and automatically routes traffic to healthy instances, ensuring high availability.

Use Cases for Azure Front Door

Global Web Application Delivery

- Azure Front Door is ideal for delivering globally distributed web applications that need to serve a large number of users from different regions with minimal latency.

Multi-Region Application Architectures

- Distribute traffic intelligently across multiple Azure regions to improve availability and ensure disaster recovery, reducing downtime for critical applications.

Enhanced Security for Web Applications

- Protect applications from threats with integrated WAF, SSL offloading, and custom routing rules that enhance both security and performance.

High-Performance Content Delivery

- Cache static content closer to end users, reducing load on backend services and improving the user experience for high-traffic applications.

Load Balancing for Microservices

- Use Front Door to manage and balance traffic across multiple microservices deployed in different regions, ensuring seamless communication and fault tolerance.

Configuring Azure Front Door

Create Front Door Instance:

- Go to the Azure Portal, navigate to Front Door and click Create.

- Choose your Subscription, Resource Group, and Region.

- Provide a name for the Front Door instance and configure basic settings.

Define Backend Pools:

- Create backend pools with your services (e.g., VMs, web apps, or APIs).

- Configure load balancing settings for each backend to ensure optimal traffic distribution.

Set Routing Rules:

- Define routing rules that determine how traffic is directed to the backend pools.

- You can route based on URL paths, HTTP methods, and more.

Enable Web Application Firewall (WAF):

- Configure WAF policies to protect your application from common vulnerabilities and attacks.

Set Up SSL/TLS Offloading:

- Configure SSL certificates for Front Door to handle HTTPS traffic and offload SSL termination from backend servers.

Review and Deploy:

- Review your settings and click Create to deploy your Azure Front Door instance.

Azure Front Door is an effective solution for building highly available, secure, and performant web applications that serve users globally. It integrates load balancing, security features, and intelligent routing to provide a seamless experience across different regions and services.

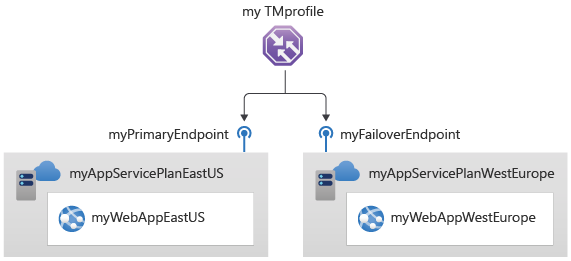

Azure Traffic Manager

Azure Traffic Manager is a global DNS-based traffic load balancer that enables you to distribute traffic to different endpoints across the world. It allows you to optimize the performance of your applications by directing user requests to the closest or most available instance based on configurable routing policies.

Key Features of Azure Traffic Manager

DNS-based Traffic Distribution

- Traffic Manager works by managing DNS requests. When a user attempts to access a service, it returns the DNS records of the most appropriate endpoint based on the routing method and health of the endpoints.

Multiple Routing Methods

- Azure Traffic Manager supports several routing methods that help optimize traffic flow to your resources:

- Priority-based Routing: Routes traffic to a primary endpoint and fails over to secondary endpoints based on their priority.

- Weighted Routing: Distributes traffic across multiple endpoints based on weights (percentage).

- Performance-based Routing: Directs traffic to the endpoint that offers the best performance (lowest latency) based on the user’s location.

- Geographic Routing: Routes traffic based on the geographical location of the user to comply with legal and regulatory requirements or to provide region-specific services.

- Multivalue Routing: Returns multiple healthy endpoints to clients, balancing traffic between them.

- Azure Traffic Manager supports several routing methods that help optimize traffic flow to your resources:

Endpoint Monitoring

- Traffic Manager regularly checks the health of your endpoints using health probes to ensure they are available and responsive.

- If an endpoint is determined to be unhealthy, Traffic Manager routes traffic to the next healthiest endpoint automatically.

Automatic Failover

- Traffic Manager provides high availability by routing traffic to healthy endpoints in the event of failures. This helps in creating a disaster recovery solution by balancing traffic between multiple regions or services.

Global Reach and Redundancy

- Traffic Manager enables global load balancing by allowing you to distribute traffic across Azure regions or even on-premises data centers, providing geographic redundancy for your applications.

Customizable TTL (Time-to-Live) Settings

- Traffic Manager allows you to configure the TTL for DNS records, controlling how frequently DNS information is refreshed across clients.

Integration with Other Azure Services

- Traffic Manager can be integrated with other Azure services like Azure App Services, Virtual Machines, VM Scale Sets, Azure Web Apps, and custom endpoints (on-premises or other cloud providers).

How Azure Traffic Manager Works

DNS Request Handling:

- When a user tries to access an application or service, a DNS request is made to Azure Traffic Manager.

- Traffic Manager returns the IP address of the most appropriate endpoint based on the routing method, such as the nearest endpoint or the one with the best performance.

Health Monitoring:

- Traffic Manager monitors the health of each endpoint through health probes.

- If an endpoint fails the health check, traffic is automatically redirected to the next available endpoint.

Traffic Routing:

- The routing decision is based on the routing method you’ve selected. For instance, with performance-based routing, traffic will be routed to the endpoint with the best performance for the user’s location.

Use Cases for Azure Traffic Manager

High Availability and Failover

- Use Traffic Manager to distribute traffic to multiple regional or global endpoints, ensuring that your application is highly available, with automatic failover in case of regional outages.

Geographically Distributed Applications

- When your users are located in different parts of the world, you can use Traffic Manager to route traffic to the nearest available service, improving performance and reducing latency.

Disaster Recovery

- Implement disaster recovery strategies by routing traffic to a secondary endpoint if the primary service becomes unavailable.

Traffic Optimization

- Use performance-based routing to direct traffic to the endpoint that provides the best response time, based on real-time latency measurements.

Regulatory Compliance

- Use geographic routing to direct users to the appropriate region to meet legal and regulatory requirements, such as data residency or locality laws.

Configuring Azure Traffic Manager

Create a Traffic Manager Profile:

- Go to the Azure Portal, search for Traffic Manager profiles, and click Create.

- Choose the Routing Method (e.g., Priority, Weighted, Performance, Geographic, or Multivalue).

- Configure a DNS name that will be used by Traffic Manager to route traffic.

Define Endpoints:

- Add the endpoints you want Traffic Manager to route to. These can be Azure services like App Services, Virtual Machines, or external endpoints such as on-premises servers or services outside of Azure.

Configure Health Probes:

- Set up health probes to check the availability and performance of your endpoints. You can define the protocol (HTTP/HTTPS) and the URL path for the health probe.

Define Routing Settings:

- Set weights for weighted routing or configure geographic settings for geographic routing.

- For performance-based routing, the system will automatically assess the best-performing endpoint for your users.

Monitor and Adjust:

- Traffic Manager allows you to monitor the health and performance of your endpoints in real-time.

- Make adjustments to routing methods, endpoints, and health probes based on the results.

Configure TTL:

- Set the TTL (Time-to-Live) for DNS responses to control how long clients cache the DNS information.

Review and Deploy:

- After setting up routing rules, health checks, and endpoint configurations, review your setup and click Create.

Azure Traffic Manager is ideal for improving the performance, availability, and resilience of your applications by intelligently distributing traffic across multiple endpoints. It allows for high flexibility, whether you are managing geographically distributed workloads, implementing disaster recovery, or optimizing performance.

Azure CDN (Content Delivery Network)

Azure Content Delivery Network (CDN) is a global network of servers that provides fast delivery of web content, such as HTML pages, images, videos, stylesheets, and scripts, by caching the content at strategically placed locations around the world. The main goal of Azure CDN is to reduce latency and improve the performance of your applications by serving content from a nearby location to the end user.

Key Features of Azure CDN

Global Reach

- Azure CDN has a vast network of servers distributed across many regions worldwide. This ensures that content is served from the closest edge node, reducing latency and improving performance for end users globally.

Caching and Content Delivery

- Azure CDN caches static content at edge nodes and serves it directly to users, offloading traffic from your origin server. This helps to reduce load times for frequently accessed content and lowers the load on the origin server.

Dynamic Site Acceleration

- While primarily focused on static content, Azure CDN also supports dynamic content acceleration, improving the performance of dynamic web pages by reducing latency and optimizing traffic routes between users and servers.

Custom Domain and SSL Support

- Azure CDN allows you to use your own custom domain for content delivery and supports SSL for secure communication between clients and the CDN edge servers.

Content Optimization

- Azure CDN can optimize content delivery by automatically compressing files (e.g., images, JavaScript, CSS) and using techniques such as caching headers and minification to improve load times.

Security Features

- HTTPS Support: Azure CDN supports HTTPS, ensuring that content is delivered securely.

- DDoS Protection: Azure’s global network can help mitigate distributed denial-of-service (DDoS) attacks by handling traffic spikes and ensuring that content is served without interruption.

- Token Authentication: Protect your content by using token-based authentication to restrict access to specific users or applications.

Analytics and Reporting

- Azure CDN provides detailed analytics and reporting on traffic, cache hit/miss ratios, content performance, and more, helping you monitor and optimize the delivery of your content.

Custom Cache Control

- With Azure CDN, you can configure cache settings to control how long content is cached at the edge nodes, and you can clear the cache when content is updated.

Multiple CDN Providers

- Azure offers different CDN providers, including Microsoft CDN, Verizon, and Akamai. You can choose a provider based on your needs for performance, regional presence, and features.

How Azure CDN Works

Request Routing:

- When a user requests content, Azure CDN directs the request to the nearest edge server based on geographic location, network conditions, and other factors.

Content Caching:

- The edge server checks if the requested content is already cached. If it is, the server delivers the cached content directly to the user. If it’s not cached, the CDN server fetches the content from the origin server and caches it for future requests.

Content Delivery:

- Once the content is cached at the edge server, subsequent users who request the same content will receive it from the edge server, reducing latency and improving performance.

Cache Expiration and Purge:

- Content in the cache is served for a specific duration (TTL - Time to Live) before it expires. After expiration, the CDN will fetch fresh content from the origin. You can also manually purge content from the cache if updates are required.

Use Cases for Azure CDN

Improved Website Performance

- Serve static website content like images, stylesheets, and scripts from the nearest CDN edge node, reducing load times and improving user experience.

Video Streaming

- Distribute media content such as videos and live streaming events to users across the world, providing high-quality, low-latency playback.

API Acceleration

- Accelerate the delivery of dynamic API responses, reducing latency for API-driven applications.

Global Web Applications

- Azure CDN is ideal for applications that are accessed by users globally. It reduces the time taken for requests to travel to the origin server by serving the content from nearby edge nodes.

Software Downloads

- Host software packages, patches, and updates on Azure CDN to ensure faster download speeds for users worldwide.

Configuring Azure CDN

Create a CDN Profile:

- Go to the Azure Portal, search for CDN, and click Create.

- Choose your Subscription, Resource Group, and Region.

- Define a CDN Profile and provide a name for the profile.

Choose CDN Provider:

- Select a CDN provider, such as Microsoft, Verizon, or Akamai, based on your needs for performance and global reach.

Create a CDN Endpoint:

- Add an Endpoint to the CDN profile, where you specify the origin (e.g., Azure Blob Storage, Web App, or custom origin).

- Set the custom domain (if using one) and configure SSL certificates if necessary.

Configure Caching Rules:

- Define caching rules to control how long content is cached at edge nodes. You can customize the cache duration for different content types or files.

Enable HTTPS:

- If necessary, enable HTTPS for secure content delivery. You can use an Azure-managed certificate or your own custom certificate.

Monitor Traffic and Performance:

- Use the Azure Portal to monitor CDN performance, cache hits/misses, traffic analytics, and other key metrics.

Clear or Refresh Cache:

- You can manually purge the cache or set up cache-control headers to refresh the content as needed.

Benefits of Using Azure CDN

Faster Content Delivery:

- By caching content at edge locations, Azure CDN reduces latency and ensures faster content delivery, improving user experience.

Scalability:

- Azure CDN is highly scalable and can handle large volumes of traffic without impacting performance, making it suitable for high-traffic websites and applications.

Global Performance Optimization:

- Azure CDN ensures that content is served from the closest edge server to the user, optimizing performance globally and reducing the burden on your origin servers.

Cost Savings:

- Offloading traffic to CDN reduces the load on your origin servers, which can lead to cost savings in infrastructure and bandwidth.

Improved Availability:

- With a global network of servers, Azure CDN improves the availability and reliability of content delivery, ensuring uptime even during traffic spikes.

Azure CDN is a powerful tool to improve the performance, scalability, and availability of web applications by caching content at strategic locations worldwide. Whether you’re delivering static content, streaming media, or optimizing APIs, Azure CDN enhances the user experience by reducing latency and increasing the speed of content delivery.

Azure Networking Services Comparison: Front Door vs. Load Balancer vs. Application Gateway vs. Traffic Manager

Azure provides multiple networking services tailored for different scenarios. Here’s a detailed comparison of Azure Front Door, Azure Load Balancer, Azure Application Gateway, and Azure Traffic Manager:

1. Purpose

| Service | Purpose |

|---|---|

| Azure Front Door | Global load balancing and accelerated delivery of web applications across regions. |

| Azure Load Balancer | Provides Layer 4 (TCP/UDP) load balancing within a region for high availability. |

| Azure Application Gateway | Layer 7 (HTTP/HTTPS) load balancing with advanced application delivery features. |

| Azure Traffic Manager | DNS-based global traffic routing to distribute requests across multiple regions. |

2. Layer of Operation

| Service | Layer in OSI Model |

|---|---|

| Azure Front Door | Layer 7 (HTTP/HTTPS) |

| Azure Load Balancer | Layer 4 (TCP/UDP) |

| Azure Application Gateway | Layer 7 (HTTP/HTTPS) |

| Azure Traffic Manager | DNS-level routing (not tied to a specific OSI layer) |

3. Key Features

| Service | Key Features |

|---|---|

| Azure Front Door | Global distribution, caching, WAF, TLS termination, session affinity, path-based routing. |

| Azure Load Balancer | Low latency, regional load balancing, zonal support, outbound NAT. |

| Azure Application Gateway | URL-based routing, WAF, SSL offloading, session affinity, autoscaling. |

| Azure Traffic Manager | Geographic-based routing, performance-based routing, failover, priority routing. |

4. Use Cases

| Service | Typical Use Cases |

|---|---|

| Azure Front Door | Global web application delivery, CDN integration, multi-region redundancy. |

| Azure Load Balancer | Distributing traffic across VMs, containers, or services within a region. |

| Azure Application Gateway | Web application firewall (WAF), load balancing for APIs, SSL offloading. |

| Azure Traffic Manager | Disaster recovery, routing to the nearest region, region-specific failover. |

5. Security

| Service | Security Features |

|---|---|

| Azure Front Door | Web Application Firewall (WAF), DDoS protection, TLS termination. |

| Azure Load Balancer | NSG support, but no built-in WAF or TLS termination. |

| Azure Application Gateway | Integrated WAF, TLS termination, and secure traffic handling. |

| Azure Traffic Manager | No built-in security features; relies on endpoints’ security measures. |

6. Global vs. Regional

| Service | Scope |

|---|---|

| Azure Front Door | Global |

| Azure Load Balancer | Regional |

| Azure Application Gateway | Regional |

| Azure Traffic Manager | Global |

7. Pricing

| Service | Pricing Model |

|---|---|

| Azure Front Door | Based on data transfer, requests, and WAF policies. |

| Azure Load Balancer | Pay-as-you-go for rules, processed data, and outbound traffic. |

| Azure Application Gateway | Based on instance size, capacity units, and WAF usage. |

| Azure Traffic Manager | Based on number of DNS queries processed. |

Choosing the Right Service

| Scenario | Recommended Service |

|---|---|

| Delivering web applications globally with caching and acceleration. | Azure Front Door |

| Balancing traffic between VMs or containers in a single region. | Azure Load Balancer |

| Routing traffic to specific paths or URLs with advanced Layer 7 capabilities. | Azure Application Gateway |

| Distributing traffic globally and routing based on proximity or performance. | Azure Traffic Manager |

References: